Sound & music computing

iDDY - an Interactive, Capacitive & Non-Linear Instrument

This thesis introduces iDDY, a novel digital musical instrument (DMI) that combines nonlinear dynamics with capacitive touch sensing to enable expressive sound control. At its core is a Duffing oscillator, a nonlinear system capable of producing complex and chaotic behaviors, coupled with an 8-band filterbank for real-time spectral shaping. Gestural input from capacitive sensors, including the Trill Bar and Trill Hex, lets performers navigate intuitively between stable and chaotic sonic states. Designed for live performance, improvisation, and experimental sound creation, iDDY supports rich, nuanced sound manipulation. The research investigates mapping strategies, evaluates playability through autobiographical design (ABD) methods, and demonstrates how controlled chaos can enhance musical expressivity. These findings contribute to DMI design by showing how chaotic systems can be harnessed effectively for dynamic, real-time musical interaction.
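The Duffing oscillator behind the sound engine can be sketched as follows. This is a minimal Python sketch, not iDDY's implementation, which runs on Bela in RNBO / Pure Data / C++. It integrates the standard forced Duffing equation x'' + δx' + αx + βx³ = γ cos(ωt) with a fourth-order Runge-Kutta step per audio sample.

The function name `duffing` and its `f_drive` time scaling are assumptions made for illustration. The default coefficients (δ = 0.3, α = -1, β = 1, ω = 1.2, γ = 0.5) are a double-well setting often used to illustrate chaotic motion. They are not iDDY's parameters.

```python
import math


def duffing(n_samples, fs=48000.0, f_drive=110.0, gamma=0.5,
            delta=0.3, alpha=-1.0, beta=1.0, omega=1.2,
            x0=0.1, v0=0.0):
    """Render n_samples of a forced Duffing oscillator,
    x'' + delta*x' + alpha*x + beta*x**3 = gamma*cos(omega*t),
    integrated with classical RK4 in dimensionless time (hypothetical helper)."""
    # Dimensionless step chosen so the drive term completes f_drive cycles
    # per second of audio at sample rate fs (an assumed time scaling).
    h = 2.0 * math.pi * f_drive / (fs * omega)

    def accel(t, x, v):
        return gamma * math.cos(omega * t) - delta * v - alpha * x - beta * x ** 3

    x, v, t = x0, v0, 0.0
    out = []
    for _ in range(n_samples):
        # Classical fourth-order Runge-Kutta step on the state (x, v).
        k1x, k1v = v, accel(t, x, v)
        k2x, k2v = v + 0.5 * h * k1v, accel(t + 0.5 * h, x + 0.5 * h * k1x, v + 0.5 * h * k1v)
        k3x, k3v = v + 0.5 * h * k2v, accel(t + 0.5 * h, x + 0.5 * h * k2x, v + 0.5 * h * k2v)
        k4x, k4v = v + h * k3v, accel(t + h, x + h * k3x, v + h * k3v)
        x += h / 6.0 * (k1x + 2.0 * k2x + 2.0 * k3x + k4x)
        v += h / 6.0 * (k1v + 2.0 * k2v + 2.0 * k3v + k4v)
        t += h
        out.append(x)
    return out
```

One plausible performance mapping is to sweep `gamma` from a Trill Bar position: small forcing tends to settle into periodic motion, while larger forcing can become irregular. The exact thresholds depend on the other coefficients.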

High-density fiberboard

21x Diode LEDs

12 x 12 Copper wired grid

4 x SPST Push Buttons

8x 10kΩ Slide Potentiometers

2x Trill Hex

2x Trill Bar

Powered by Bela (BeagleBone)

RNBO / Pure Data / C++

Tela Modal Synthesizer - Fors

Tela is a modal synthesis-based instrument with a “ratcheting impulse generator and/or variable grain generator with bendable tonality and shapeable contour control”. With up to 16-voice polyphony, one LFO parameter page, a mono legato mode, and a patch randomizer, Tela orchestrates a vast array of intertwined resonant peaks that form a critical mass of sound. It models nothing – it's a synthesizer for the here and now, to discover and create anew.
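The general idea of modal synthesis can be sketched briefly. A sound is modelled as a sum of exponentially decaying sinusoidal modes, each realised here as a two-pole resonator, and all modes are struck by impulses. A list of repeated impulse times stands in for a "ratcheting" impulse generator.

This is a hypothetical Python sketch, not Fors' implementation. The function name, mode frequencies and decay times are illustrative assumptions.

```python
import math


def modal_bank(n_samples, fs, freqs, decays, amps, impulse_times):
    """Sum of two-pole resonators (one per mode) excited by unit impulses.

    freqs         -- mode frequencies in Hz
    decays        -- exponential decay time constant (seconds) per mode
    amps          -- output gain per mode
    impulse_times -- sample indices at which an impulse strikes all modes
    """
    excite = [0.0] * n_samples
    for t in impulse_times:
        if 0 <= t < n_samples:
            excite[t] += 1.0
    out = [0.0] * n_samples
    for f, tau, g in zip(freqs, decays, amps):
        r = math.exp(-1.0 / (tau * fs))              # pole radius from decay time
        c1 = 2.0 * r * math.cos(2.0 * math.pi * f / fs)
        c2 = -r * r
        y1 = y2 = 0.0
        for n in range(n_samples):
            # y[n] = x[n] + 2 r cos(theta) y[n-1] - r^2 y[n-2]
            y = excite[n] + c1 * y1 + c2 * y2
            out[n] += g * y
            y2, y1 = y1, y
    return out
```

Evenly spaced impulse times, for example `range(0, 48000, 6000)`, give a ratchet-like pattern of re-strikes. Spreading many modes with slightly detuned frequencies is one way to approach the dense "critical mass" of resonant peaks described above.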

Beta tester

Presets

more info: https://fors.fm/tela

A Handful of Grains

This project rethinks the input-output framework of a granular synthesizer by acquiring sensorimotor data from the user's hand with a Leap Motion controller. Drawing on theories of embodied cognition and on computable expressive descriptors of human motion, it lets users develop closer relationships between sound and gesture. This is achieved through an associative learning process, driven by deliberately manipulating the action-perception loop in real time.
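A gesture-to-grain mapping of this kind can be sketched in a few lines. The sketch assumes hand features have already been extracted and normalised to 0..1, so no Leap Motion SDK calls are shown. `map_hand` is a hypothetical mapping, not the project's Max 8 patch: palm x sets the read position, palm height sets grain density, and grab strength shortens grains. `granulate` overlap-adds Hann-windowed grains read from a source buffer.

```python
import math
import random


def map_hand(palm_x, palm_height, grab):
    """Hypothetical mapping from normalised hand features (each 0..1)
    to (read position 0..1, grain length in ms, grain density in Hz)."""
    def clamp(v):
        return min(max(v, 0.0), 1.0)

    palm_x, palm_height, grab = clamp(palm_x), clamp(palm_height), clamp(grab)
    position = palm_x                        # left-right sweeps through the buffer
    grain_ms = 200.0 - 180.0 * grab          # closing the hand shortens grains
    density_hz = 5.0 + 75.0 * palm_height    # raising the hand adds grains
    return position, grain_ms, density_hz


def granulate(source, n_out, fs, position, grain_ms, density_hz,
              jitter=0.0, seed=0):
    """Overlap-add Hann-windowed grains read from `source` around `position`."""
    rng = random.Random(seed)
    g_len = max(2, int(fs * grain_ms / 1000.0))
    if len(source) < g_len:
        raise ValueError("source is shorter than one grain")
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (g_len - 1))
              for i in range(g_len)]
    hop = max(1, int(fs / density_hz))       # samples between grain onsets
    out = [0.0] * n_out
    for start in range(0, n_out - g_len + 1, hop):
        # Random scatter around the read position, clamped to the buffer.
        p = min(max(position + rng.uniform(-jitter, jitter), 0.0), 1.0)
        src = int(p * (len(source) - g_len))
        for i in range(g_len):
            out[start + i] += window[i] * source[src + i]
    return out
```

Because `map_hand` returns its values in the same order that `granulate` expects, a call such as `granulate(buffer, 48000, 48000, *map_hand(0.3, 0.7, 0.2), jitter=0.05)` renders one second of output for a single hand pose.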

Leap Motion Controller

Left and/or right hand

Max 8

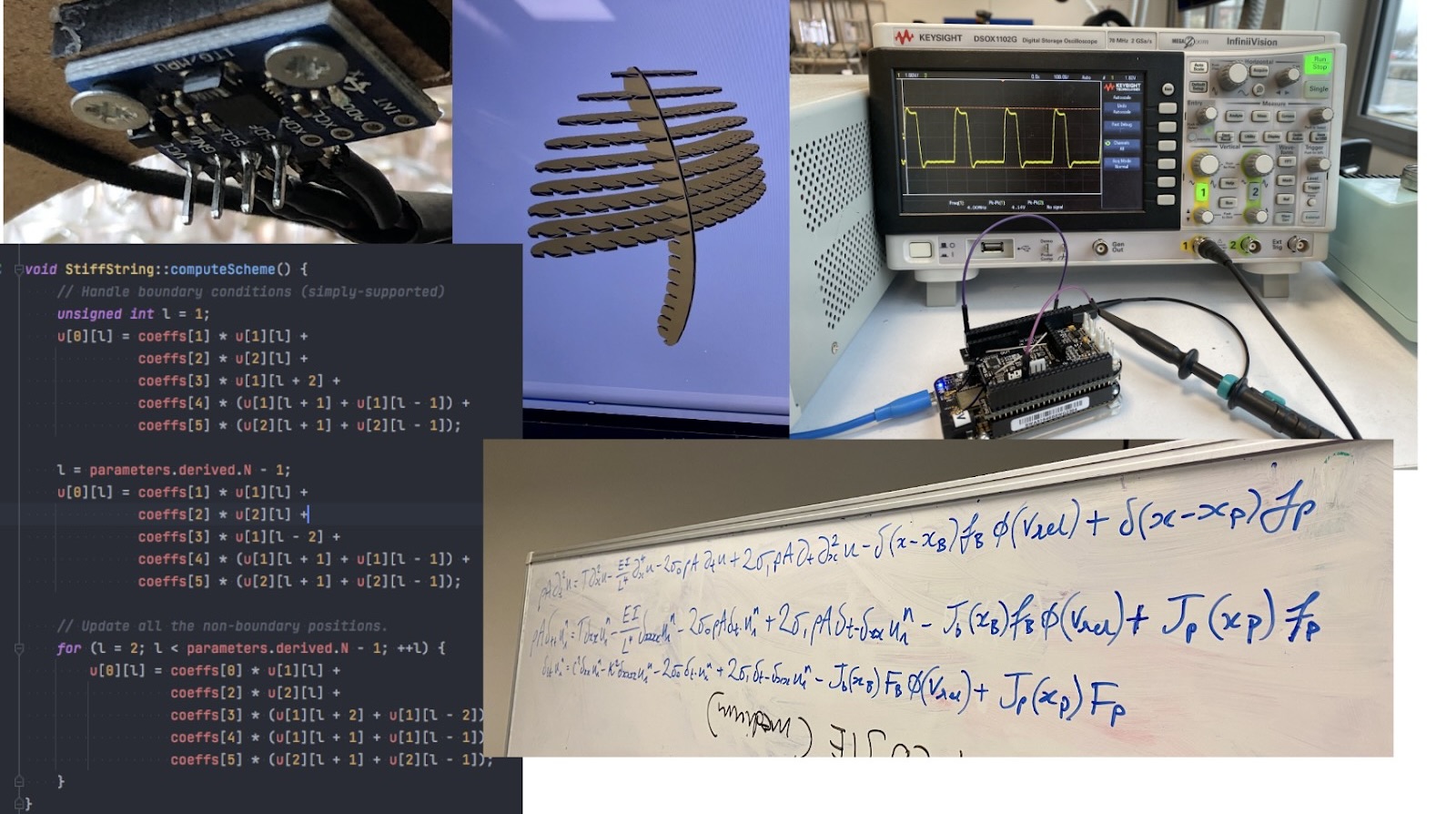

Kuplen - A Hands-On Physical Model

Kuplen is a fully integrated digital musical instrument whose tactile, tiltable surface is closely linked to the parameters of a physical modeling synthesis engine. The underlying model is a stiff string excited by a bow with a movable damping element, capable of producing a range of pitches and timbres.

User feedback shows strong interest in exploring the instrument's expressive potential. Combining free-form modes of interaction with established higher-level descriptor mapping strategies makes Kuplen a platform that invites users to explore new sonic territory, with gestural control of the physical model at the center of an exploratory framework.
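The stiffness of the string in such a model mainly shows up as inharmonicity. For a stiff string with pinned ends, partial n lies near f_n = n·f0·√(1 + B·n²), where B is the inharmonicity coefficient.

This hypothetical Python sketch renders those partials additively as freely decaying modes. It replaces Kuplen's bowed excitation with a simple strike. It also assumes a rule for the damper: a damper at relative position p shortens the decay of mode n in proportion to sin²(nπp), the squared mode shape at the contact point. That rule, the 1/n amplitude roll-off and all function names are illustrative assumptions.

```python
import math


def stiff_string_partials(f0, B, n_partials, fs):
    """Partial frequencies f_n = n * f0 * sqrt(1 + B n^2) of a pinned stiff string,
    kept below Nyquist (f_n grows with n, so the loop can stop early)."""
    partials = []
    for n in range(1, n_partials + 1):
        f = n * f0 * math.sqrt(1.0 + B * n * n)
        if f >= fs / 2.0:
            break
        partials.append(f)
    return partials


def render_string(f0, B, fs, duration, t60=1.5, damper_pos=None,
                  damper_strength=8.0, n_partials=60):
    """Additive (modal) rendering of the stiff string.

    ASSUMPTION: a damper at relative position p divides the decay time of
    mode n by 1 + damper_strength * sin^2(n*pi*p); illustrative only."""
    tau0 = t60 / math.log(1000.0)             # 60 dB decay time -> time constant
    modes = []
    for n, f in enumerate(stiff_string_partials(f0, B, n_partials, fs), start=1):
        tau = tau0
        if damper_pos is not None:
            tau /= 1.0 + damper_strength * math.sin(n * math.pi * damper_pos) ** 2
        modes.append((f, tau, 1.0 / n))       # assumed 1/n amplitude roll-off
    out = []
    for k in range(int(round(duration * fs))):
        t = k / fs
        out.append(sum(a * math.exp(-t / tau) * math.sin(2.0 * math.pi * f * t)
                       for f, tau, a in modes))
    return out
```

Here B and `damper_pos` are natural candidates for continuous control. A tilt reading from an IMU could, for instance, be scaled into either of them; the source does not state which mapping Kuplen uses.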

Acrylic plastic

High-density fiberboard

12 x 12 Copper wired grid

144 x Copper tape squares

Elastic rubber strings

1x GY-521 IMU Sensor

1x Trill Craft Capacitive Touch Sensor

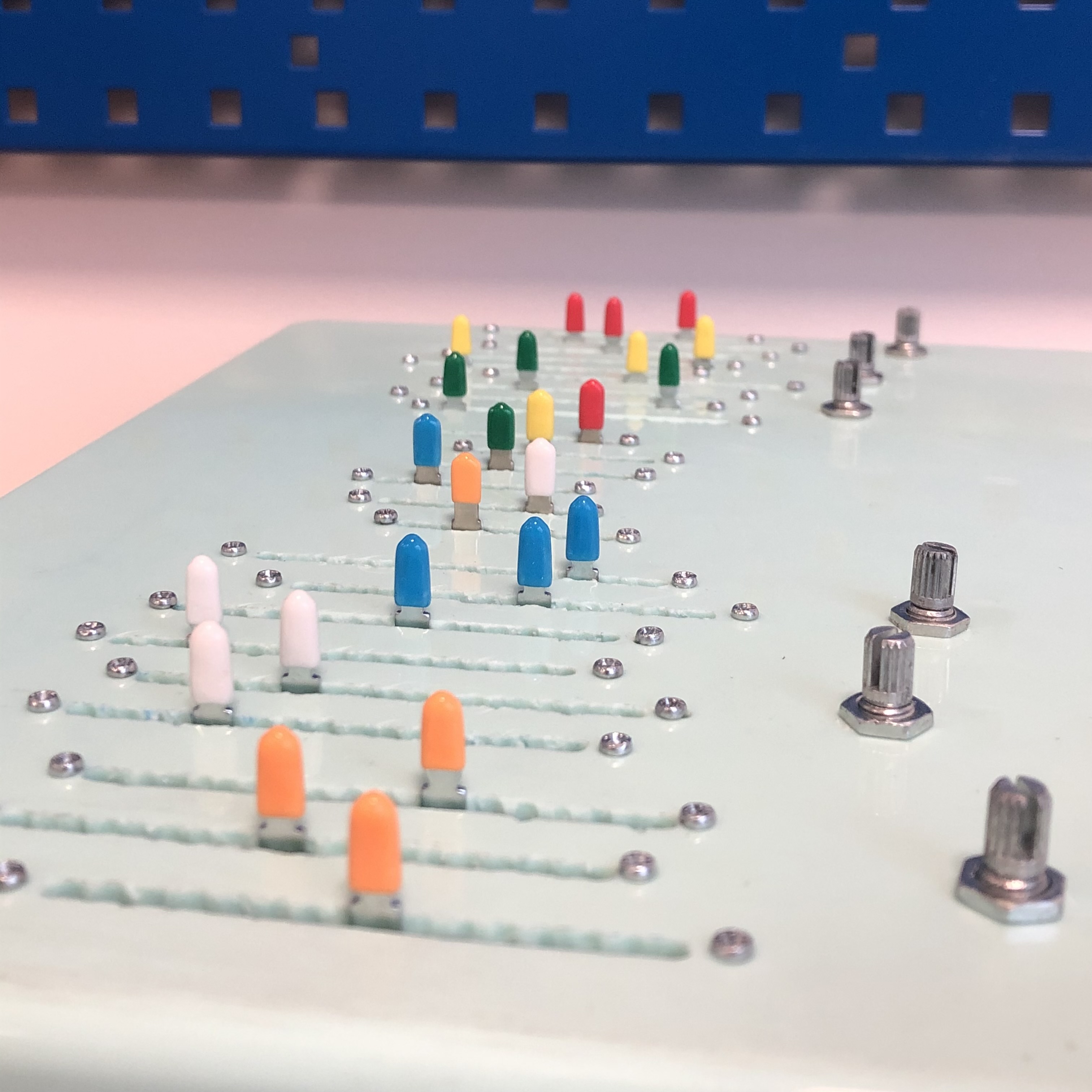

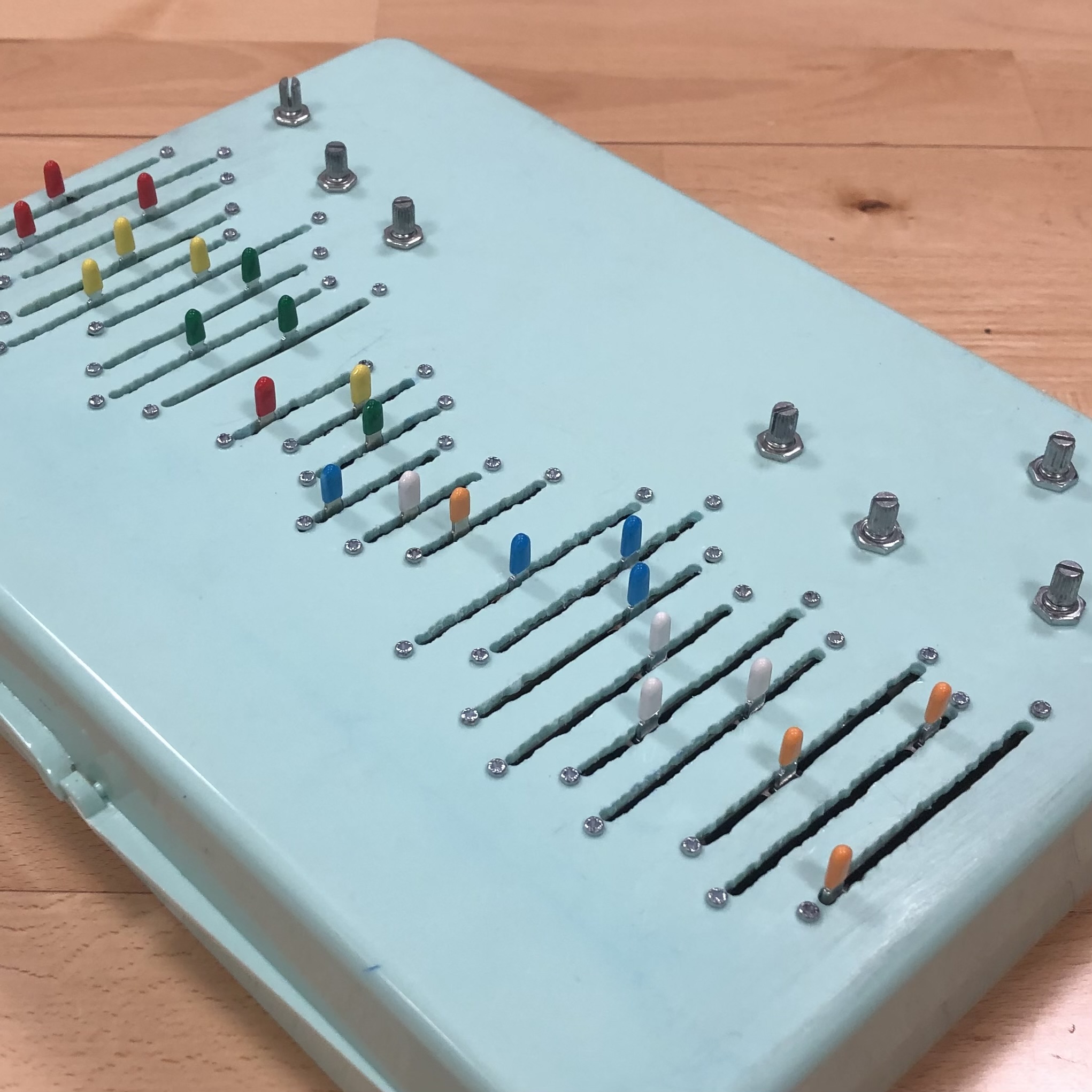

IDDY - A Haptically-Driven Instrument for Textures, (Ir)regular Syncopated Rhythms, and (A)tonal Sequences

IDDY is a synthesizer composed of six pairs of resonant low-pass filters, each pair activated by its own impulse train with a variable period. Its fingertip-controlled interface couples haptic feedback with auditory output through micro-vibration motors and force-sensitive resistors. These components are aligned with the timing of each filter voice's pinging events.

Approached from a sound-design and improvisational/compositional perspective, the synthesizer explores the multi-timbral possibilities that arise from pinging resonant filters. The results include varied textures, syncopated rhythms, and sequenced resonant (self-oscillating) pure tones. This synthesis technique lends itself to organic sound structures, while the integration of haptic and auditory senses proposes a new paradigm for both digital instruments and their controls.
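One pinged voice can be sketched as an impulse train with a randomly varying period, feeding a high-Q resonant low-pass filter. The sketch uses the low-pass biquad coefficients from Robert Bristow-Johnson's Audio EQ Cookbook.

This is a hypothetical Python sketch: IDDY itself is written in Faust, and its filter design, the "pair" structure and the motor driver are not modelled here. The function returns the onset sample indices alongside the audio, on the assumption that a haptic driver could use them to pulse a vibration motor in sync.

```python
import math
import random


def rbj_lowpass(cutoff, q, fs):
    """Resonant low-pass biquad coefficients (RBJ Audio EQ Cookbook),
    normalised so that a0 = 1. Returns (b0, b1, b2, a1, a2)."""
    w0 = 2.0 * math.pi * cutoff / fs
    cosw, alpha = math.cos(w0), math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b0 = (1.0 - cosw) / 2.0 / a0
    b1 = (1.0 - cosw) / a0
    return b0, b1, b0, -2.0 * cosw / a0, (1.0 - alpha) / a0


def ping_voice(n_samples, fs, period_s, cutoff, q, jitter=0.0, seed=0):
    """One 'pinged' voice: a variable-period impulse train into a high-Q low-pass.

    Returns (audio, onsets); onsets are the sample indices of each ping,
    usable by a (hypothetical) haptic driver to fire a vibration motor."""
    if not 0.0 <= jitter < 1.0:
        raise ValueError("jitter must be in [0, 1) so periods stay positive")
    rng = random.Random(seed)
    onsets, t = [], 0.0
    while t < n_samples:
        onsets.append(int(t))
        # Each period is randomly stretched or shrunk by up to +/- jitter.
        t += period_s * fs * (1.0 + rng.uniform(-jitter, jitter))
    x = [0.0] * n_samples
    for k in onsets:
        x[k] = 1.0
    b0, b1, b2, a1, a2 = rbj_lowpass(cutoff, q, fs)
    y = [0.0] * n_samples
    x1 = x2 = y1 = y2 = 0.0
    for n in range(n_samples):
        # Direct form I biquad.
        y[n] = b0 * x[n] + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x[n]
        y2, y1 = y1, y[n]
    return y, onsets
```

Summing six such voices with different periods, jitter amounts and cutoffs is a simple way to approach the textures and syncopations described above. Raising `q` makes each ping ring longer and closer to a sine at the cutoff frequency.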

(with additional reverb & delay)

Repurposed turquoise box

24x rubber color slide caps

24x 10kΩ linear sliders

7x 10kΩ logarithmic potentiometers

3x 16-channel CD74HC4067 analog MUXs

Teensy 4.1 Microcontroller

Powered by Faust

Implementation of a Spring Reverberation Model in MATLAB

Application of a spring reverberation modelling technique proposed by Välimäki, Parker et al.

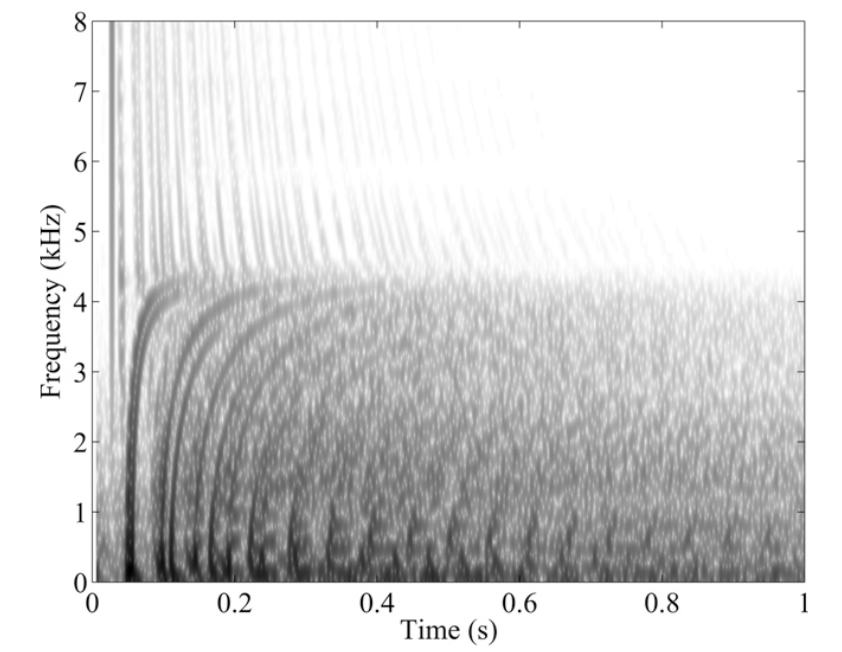

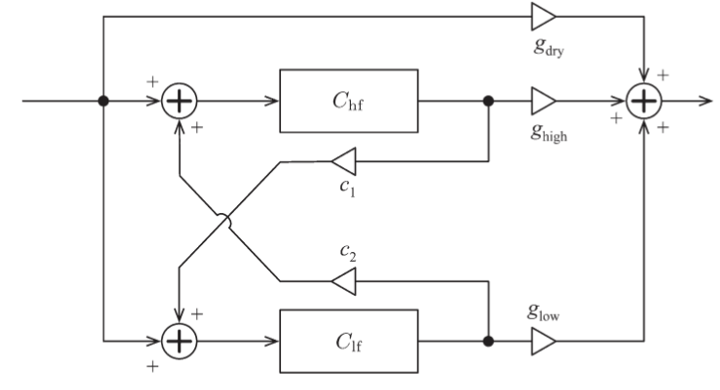

The model was developed by observing and analysing recorded impulse responses from two spring reverberation tanks, a Leem Pro KA-1210 and a Sansui RA-700. The spectrograms of both responses share the same basic structure: a sequence of decaying chirp-like pulses that is progressively blurred over time into a reverberant tail. In digital signal processing, this behaviour can be modelled with techniques familiar from other digital reverberation effects. A spectral delay filter, built from a cascade of identical all-pass filters, produces the dispersion. A modulated multi-tap delay line in a feedback loop smears and attenuates the signal over time.
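The core structure described above can be sketched compactly: a spectral delay filter (a cascade of identical first-order all-pass sections) placed inside a feedback delay loop. Because every trip around the loop passes through the cascade again, successive echoes become increasingly dispersed.

This is a simplified Python re-sketch, not the project's MATLAB code, and every name in it is hypothetical. The coefficient `a`, the stage count, the loop delay and the feedback gain are illustrative values. The loop uses a single fixed tap with no modulation, so the multi-tap smearing of the full model is omitted. The optional `k` parameter "stretches" each all-pass by replacing its unit delay with k samples.

```python
class StretchedAllpass:
    """First-order all-pass H(z) = (a + z^-k) / (1 + a z^-k).

    With k = 1 this is the ordinary first-order all-pass; k > 1 replaces
    each unit delay with k samples."""

    def __init__(self, a, k=1):
        self.a, self.k = a, k
        self.xbuf = [0.0] * k
        self.ybuf = [0.0] * k
        self.i = 0

    def tick(self, x):
        x_k, y_k = self.xbuf[self.i], self.ybuf[self.i]   # x[n-k], y[n-k]
        y = self.a * x + x_k - self.a * y_k
        self.xbuf[self.i], self.ybuf[self.i] = x, y
        self.i = (self.i + 1) % self.k
        return y


def spring_reverb(x, n_out, n_stages=80, a=-0.6, k=1,
                  loop_delay=2000, feedback=0.5):
    """Spectral delay filter (cascade of identical all-passes) inside a
    feedback delay loop; each round trip disperses the echo further."""
    assert abs(feedback) < 1.0 and abs(a) < 1.0   # keeps the loop stable
    chain = [StretchedAllpass(a, k) for _ in range(n_stages)]
    dline, idx = [0.0] * loop_delay, 0
    out = []
    for n in range(n_out):
        # Input plus the loop output from loop_delay samples ago.
        v = (x[n] if n < len(x) else 0.0) + feedback * dline[idx]
        for ap in chain:
            v = ap.tick(v)
        dline[idx] = v
        idx = (idx + 1) % loop_delay
        out.append(v)
    return out
```

The all-pass cascade has unit magnitude response, so with `feedback = 0` it redistributes an impulse's energy in time without changing its total. The decay of the tail is therefore set entirely by the feedback gain and loop delay.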

paper/code: https://github.com/gabi-blip/Implementation-of-a-Spring-Reverberation-Model-in-MATLAB

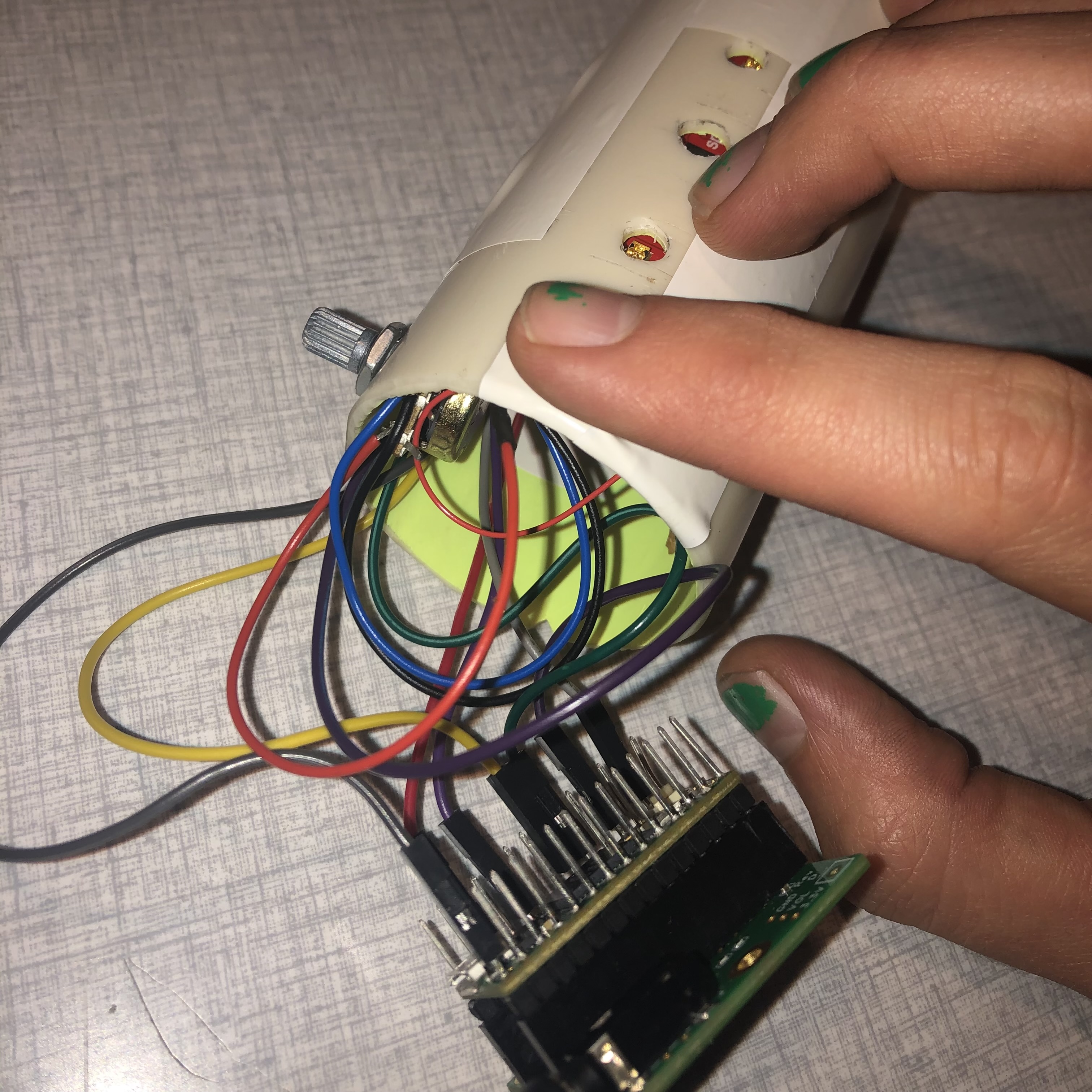

P_01 - Build an instrument in a week - Faust workshop with Romain Michon (CCRMA)

Repurposed plastic tube

ZX Distance and Gesture Sensor Sparkfun 13162

10kΩ logarithmic potentiometers

Teensy 4.1 Microcontroller

Powered by Faust